Discovered Clawdbot

Discovered Clawdbot and exploring how it can help me with various tasks around here.

I live in the south of England with my wife, two children and our dog Nova. I work at IBM as a Cloud Architect for API Connect cloud services. I enjoy running, walking, photography and spending time with my family.

You can find me elsewhere on the web: GitHub, Bluesky, Instagram, Mastodon, Threads and LinkedIn.

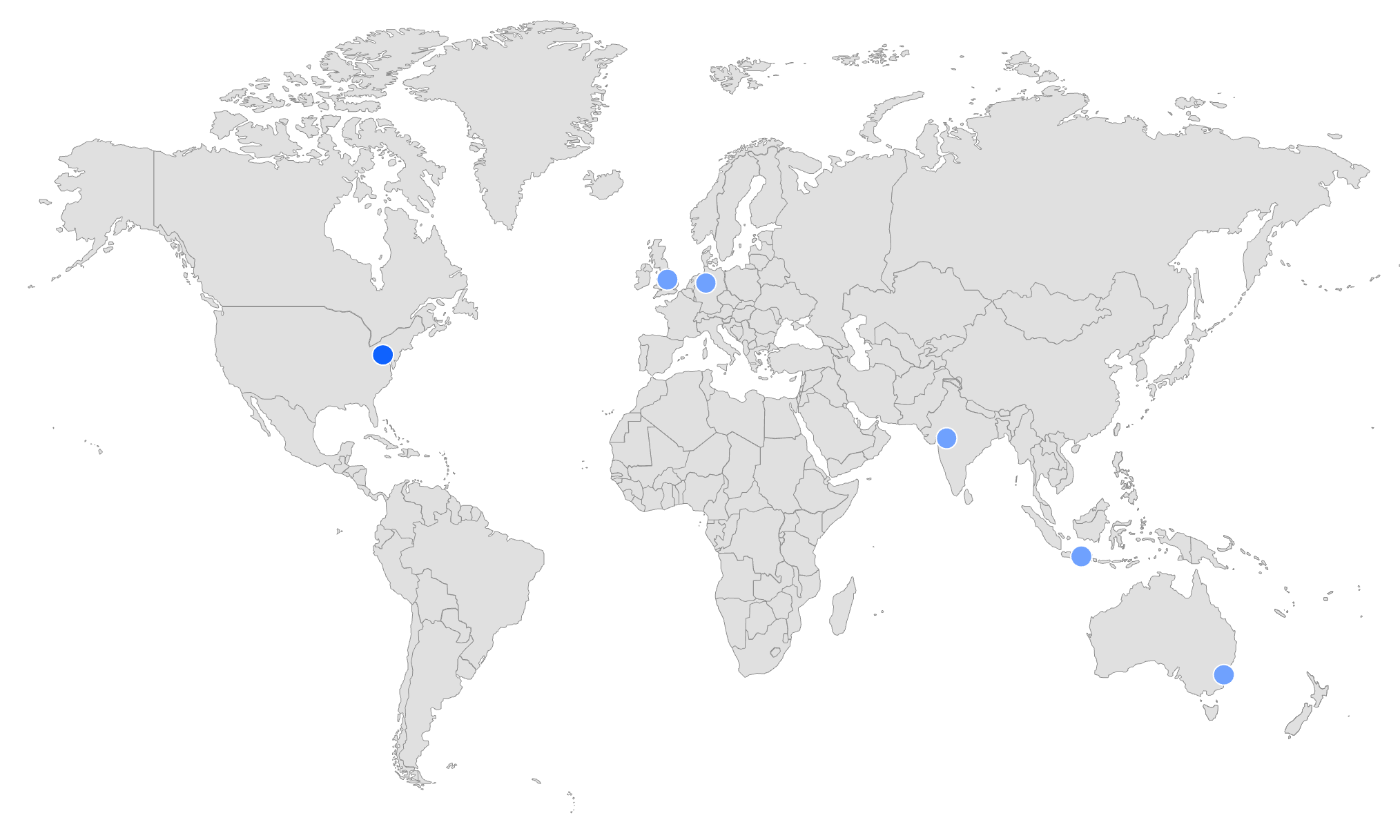

If you have customers around the world, serving your APIs from a global footprint significantly improves their experience by reducing latency and increasing reliability. With API Connect's multi-region capabilities, you can ensure users call your APIs from locations closest to them, providing faster response times and better resilience against regional outages.

In this guide, I'll walk through deploying APIs to the 6 current regions of the API Connect Multi-tenant SaaS service on AWS. At the time of writing, these regions are N. Virginia, London, Frankfurt, Mumbai, Sydney and Jakarta.

I'll use N. Virginia as the initial source location and demonstrate how to synchronize configuration across all regions.

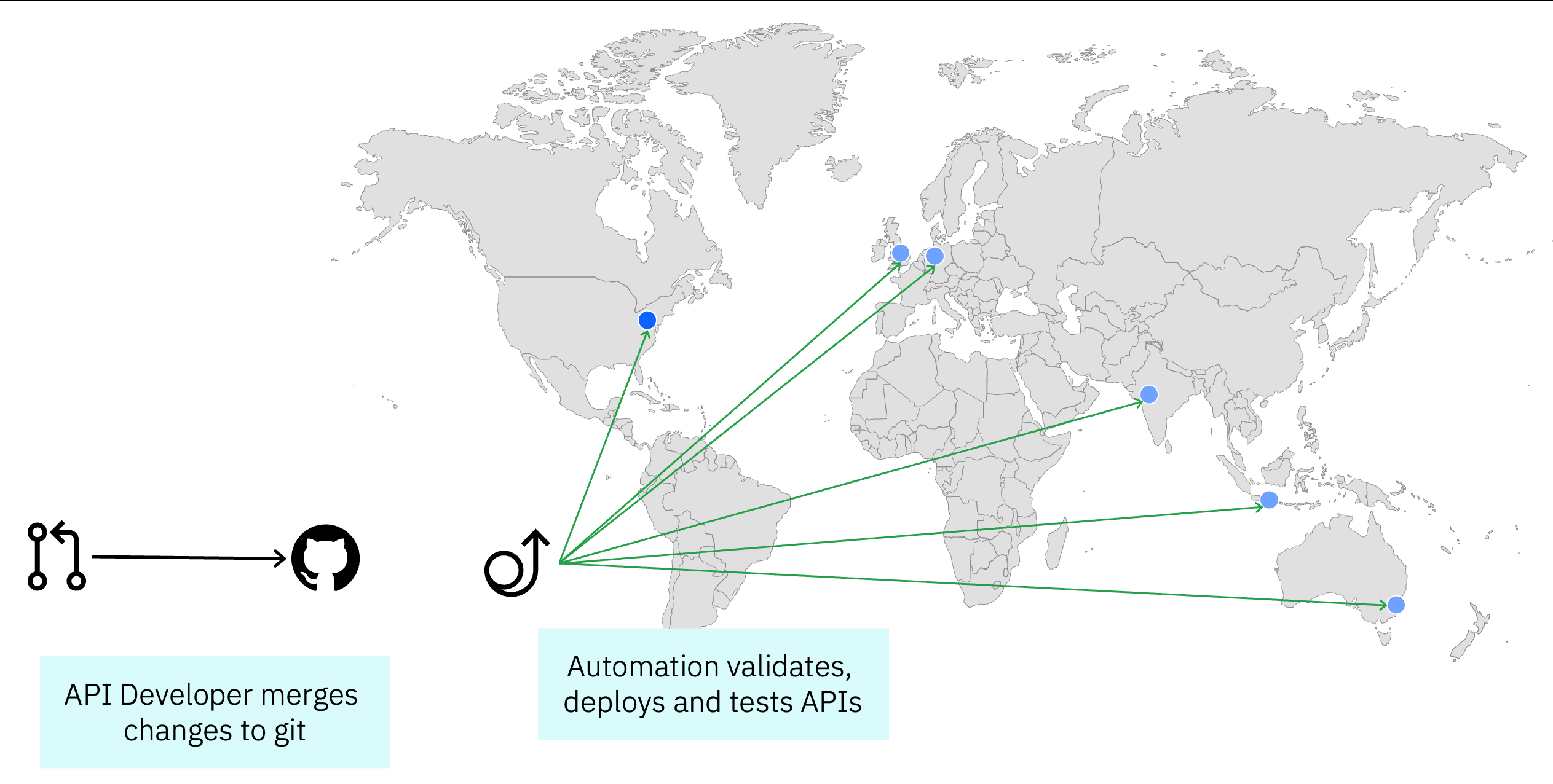

To maintain consistency across regions, you'll need a reliable deployment pipeline. This pipeline should handle the deployment of APIs and products to all regions whenever changes are made to your source code repository.

You can build this pipeline using either:

This automation ensures that whenever you merge changes to your main branch, your APIs and products are consistently deployed across all regions without manual intervention.
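As a rough sketch of the fan-out step such a pipeline might run after a merge, the loop below calls a deploy command once per region and fails the job if any region fails. The region identifiers are the ones used in the ConfigSync script in this post. `deploy_all_regions` and the `DEPLOY_CMD` hook are hypothetical names for illustration; the real publish command depends on the tooling you choose.

```shell
#!/bin/bash
# Hypothetical fan-out step for a CI job: deploy to every region,
# and fail the job if any single region fails.

# Region identifiers as used in the ConfigSync script in this post.
REGIONS="us-east-a eu-west-a eu-central-a ap-south-a ap-southeast-a ap-southeast-b"

# DEPLOY_CMD is a placeholder for whatever publishes your APIs and products
# to one region. It receives the region identifier as its last argument.
DEPLOY_CMD="${DEPLOY_CMD:-echo deploying to}"

deploy_all_regions() {
  local failed=""
  local region
  for region in $REGIONS; do
    # $DEPLOY_CMD is intentionally unquoted so "command arg" splits into words.
    if ! $DEPLOY_CMD "$region"; then
      failed="$failed $region"
    fi
  done
  if [ -n "$failed" ]; then
    echo "Deployment failed for:$failed" >&2
    return 1
  fi
  echo "Deployed to all regions"
}
```

Collecting the failures and returning a non-zero status at the end, rather than stopping at the first error, means one unavailable region does not hide problems in the others.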

Before configuring your global deployment, you'll need to:

Pro Tip: Each paid subscription for API Connect SaaS includes up to 3 instances which can be distributed across the available regions as needed. For a truly global footprint covering all 6 regions, you'll need two subscriptions.
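The subscription count is a ceiling division of regions by instances per subscription. A quick check using the figures above (the variable names are just for illustration):

```shell
#!/bin/bash
regions=6
instances_per_subscription=3

# Integer ceiling division: (a + b - 1) / b
subscriptions=$(( (regions + instances_per_subscription - 1) / instances_per_subscription ))
echo "$subscriptions"
```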

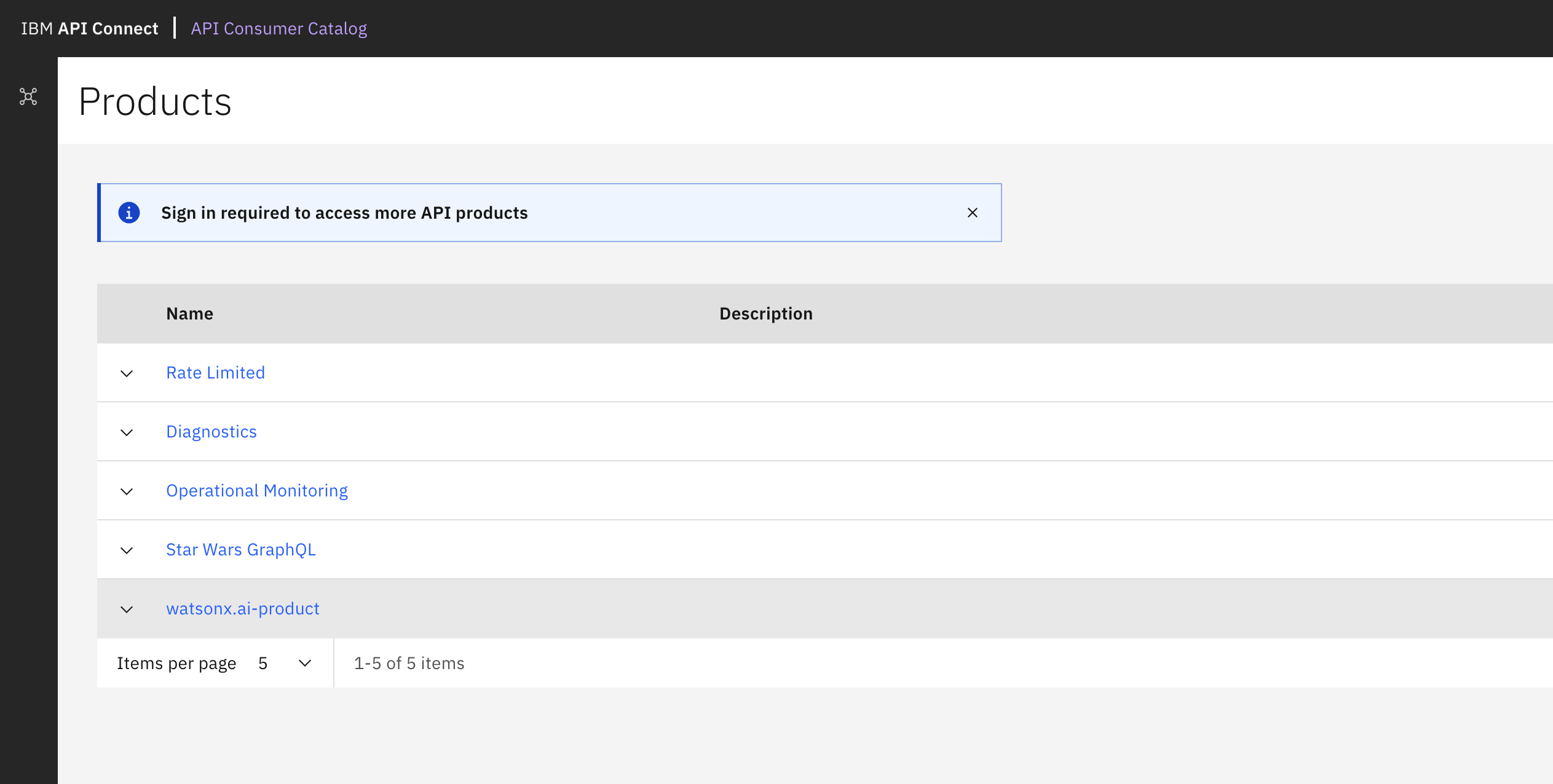

For developer engagement, you'll need a portal where API consumers can discover and subscribe to your APIs. In my implementation, I chose the new Consumer Catalog for its simplicity and ease of setup.

While I didn't need custom branding for this example, I did enable approval workflows for sign-ups. This allows me to:

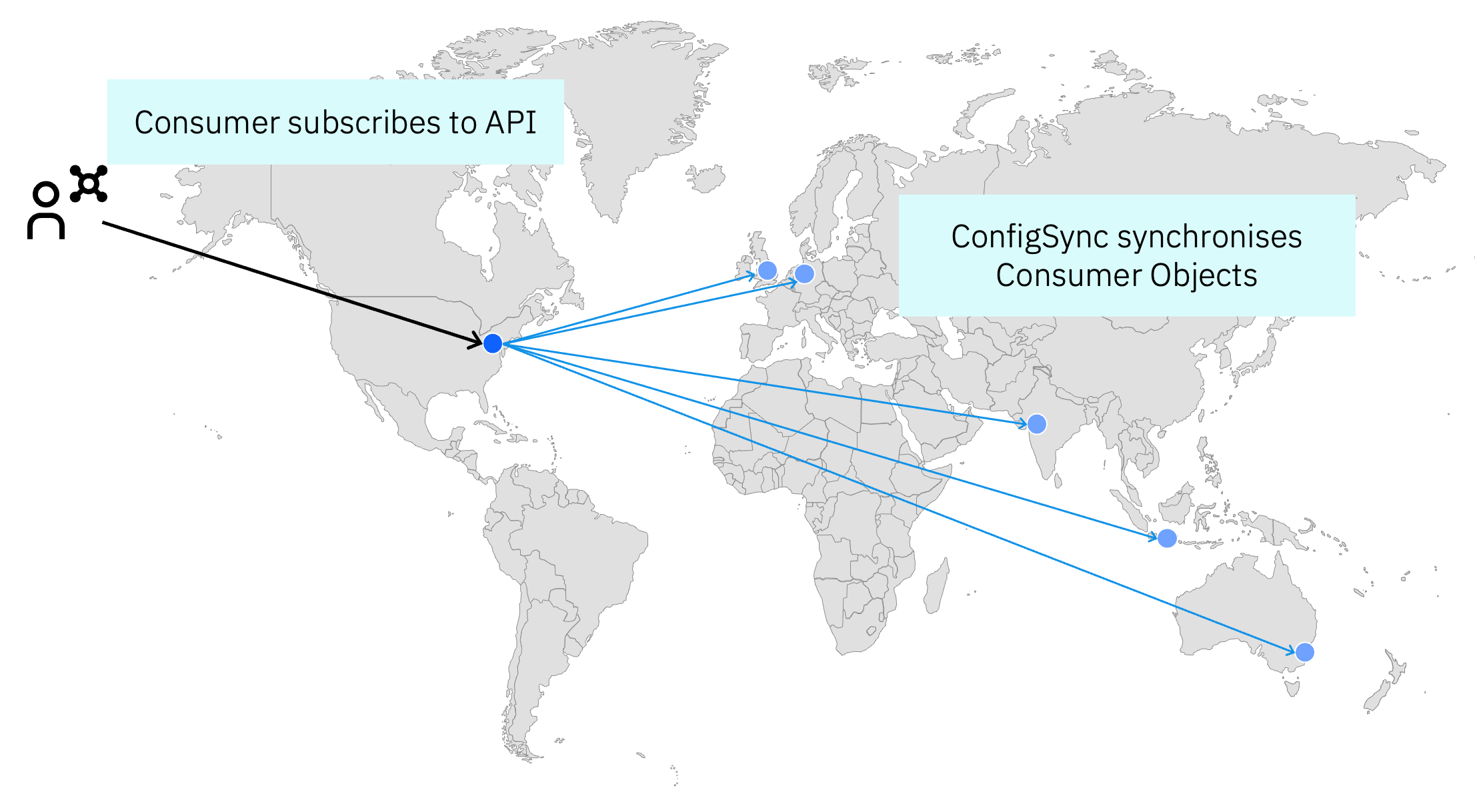

The key to maintaining consistency across regions is ConfigSync, which pushes configuration changes from your source region to all target regions. Since ConfigSync operates on a source-to-target basis, you'll need to run it for each target region individually.

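My implementation uses a bash script that sets the source and target properties, loops through the target regions, looks up each region's API key in a config file, and runs ConfigSync for each one.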

Here's the script I use:

```bash
#!/bin/bash

# US East is always the source catalog
export SOURCE_ORG=ibm
export SOURCE_CATALOG=production
export SOURCE_REALM=provider/default-idp-2
export SOURCE_TOOLKIT_CREDENTIALS_CLIENTID=599b7aef-8841-4ee2-88a0-84d49c4d6ff2
export SOURCE_TOOLKIT_CREDENTIALS_CLIENTSECRET=0ea28423-e73b-47d4-b40e-ddb45c48bb0c

# Set the management server URL and retrieve the API key for the source region
export SOURCE_MGMT_SERVER=https://platform-api.us-east-a.apiconnect.automation.ibm.com/api
export SOURCE_ADMIN_APIKEY=$(grep 'us-east-a:' ~/.apikeys.cfg | awk '{print $2}')

# Set common properties for all targets - in SaaS the toolkit credentials are common across regions.
export TARGET_ORG=ibm
export TARGET_CATALOG=production
export TARGET_REALM=provider/default-idp-2
export TARGET_TOOLKIT_CREDENTIALS_CLIENTID=599b7aef-8841-4ee2-88a0-84d49c4d6ff2
export TARGET_TOOLKIT_CREDENTIALS_CLIENTSECRET=0ea28423-e73b-47d4-b40e-ddb45c48bb0c

# Loop through the other regions to use as sync targets:
# eu-west-a (London), eu-central-a (Frankfurt), ap-south-a (Mumbai),
# ap-southeast-a (Sydney), ap-southeast-b (Jakarta)
stacklist="eu-west-a eu-central-a ap-south-a ap-southeast-a ap-southeast-b"

for stack in $stacklist
do
  # Set the target management server URL for the current region
  export TARGET_MGMT_SERVER="https://platform-api.$stack.apiconnect.automation.ibm.com/api"

  # Retrieve the API key for the current region from the config file
  export TARGET_ADMIN_APIKEY=$(grep "$stack:" ~/.apikeys.cfg | awk '{print $2}')

  # Run the ConfigSync tool to synchronize configuration from source to target
  ./apic-configsync
done
```

For managing API keys, I store them in a configuration file at `~/.apikeys.cfg` where each line contains a region-key pair in the format `region: apikey`. This approach keeps sensitive credentials out of the script itself. For a more production-ready version, this API key handling would be handed off to a secret manager.
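A slightly more defensive lookup than an inline `grep` could look like the sketch below. It assumes the `region: apikey` file format described above; the function name `lookup_apikey` and its optional file argument are hypothetical, added for illustration. It matches the region exactly and fails when no key is found, so a typo in a region name stops the run instead of passing an empty key to ConfigSync.

```shell
#!/bin/bash
# Look up the API key for a region in a "region: apikey" file.
# Usage: lookup_apikey <region> [file]
lookup_apikey() {
  local region="$1"
  local file="${2:-$HOME/.apikeys.cfg}"
  local key
  # Compare the first field exactly against "<region>:" and print the second field.
  key="$(awk -v r="$region:" '$1 == r { print $2; exit }' "$file")"
  if [ -z "$key" ]; then
    echo "No API key found for region '$region' in $file" >&2
    return 1
  fi
  printf '%s\n' "$key"
}
```

When using it in a loop, assign first and export separately, because `export VAR=$(...)` hides the exit status of the lookup: `TARGET_ADMIN_APIKEY="$(lookup_apikey "$stack")" || exit 1`, followed by `export TARGET_ADMIN_APIKEY`.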

After setting up your global deployment, it's crucial to verify that everything works correctly across all regions. Follow these steps:

Test the source region first:

Verify ConfigSync completion:

Test each target region:

Monitor for any issues:
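The per-region checks can be scripted in the same style as the sync loop. The sketch below is only an illustration: it probes one URL per region with `curl` and reports which regions respond. The `URL_TEMPLATE` value is a placeholder, not a real endpoint, so substitute the URL of one of your own APIs; `probe` and `check_regions` are hypothetical helper names.

```shell
#!/bin/bash
# All six regions, using the identifiers from the sync script.
REGIONS="us-east-a eu-west-a eu-central-a ap-south-a ap-southeast-a ap-southeast-b"

# Placeholder: replace with the URL of one of your APIs.
# The REGION token is swapped for each region identifier.
URL_TEMPLATE="${URL_TEMPLATE:-https://REGION.example.invalid/health}"

# Succeeds when the URL returns a non-error HTTP status within 10 seconds.
probe() {
  curl --fail --silent --show-error --output /dev/null --max-time 10 "$1"
}

check_regions() {
  local status=0
  local region url
  for region in $REGIONS; do
    # Build the per-region URL from the template.
    url="$(printf '%s' "$URL_TEMPLATE" | sed "s/REGION/$region/")"
    if probe "$url"; then
      echo "OK   $region"
    else
      echo "FAIL $region"
      status=1
    fi
  done
  return "$status"
}
```

Running `check_regions` after each sync gives a quick pass/fail summary per region, and its exit status can gate later pipeline steps.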

Once your global API deployment is working, consider these enhancements:

A global API deployment strategy with API Connect provides significant benefits for organizations with worldwide customers. By following the approach outlined in this guide, you can:

While setting up a global footprint requires some initial configuration, the long-term benefits for your API consumers make it well worth the effort.

Last week I had the opportunity to attend the three-day Product Academy for Teams course at the IBM Silicon Valley Lab in San Jose.

This brought together members of our team from across different disciplines - design, product management, user research, and engineering. It was fantastic to spend time face to face with other members of the team that we usually only work with remotely, and to go through the education together, learning from each other's approaches and ideas. The API Connect team attendees were split into three smaller teams to work on separate items, and each was joined by a facilitator to help us work through the exercises.

We spent time together learning about the different phases of the product development lifecycle, looking in each at the process, some of the best practices and ways to apply them to our product. It was particularly effective to use real examples from our roadmap in the exercises so we could collaboratively apply the new approaches and see how they relate directly to our product plan.

Each day of the course looked at a different phase of the product development lifecycle - Discovery, Delivery and Launch & Scale:

Discovery - Are we building the right product? - looking at and assessing opportunities and possible solutions we could offer for them, using evidence to build confidence and reviewing the impact this would have on our North Star Metric.

Delivery - Are we building it right? - ensuring we have a clear understanding of the outcomes we're looking for, how we can achieve them and how we can measure success.

Launch & Scale - Are customers getting value? - ensuring we enable customers to be successful in their use of the product and that we are able to get feedback and data to measure this and improve.

Each of these phases takes an iterative approach, and we looked at how we could apply this to our product plan. We also looked at some of the tools and techniques that can help us do so, and members of the different product teams attending shared how they are using these today.

On the final day of the course I also had the opportunity to share some of our journey with instrumentation, how this has evolved and some of the lessons we learnt along the way, such as the benefits of having a data scientist on the team. I am looking forward to sharing this with the wider team and seeing how we apply some of the learning to improve our systems going forward, for example by validating decisions better through measurement and by improving our use of data.

I headed up from San Francisco to Mount Tamalpais State Park to explore the area and see some of the sights before heading down to San Jose. I drove through the spectacular scenery of the State Park, heading up above the clouds and winding round the mountains to the East Peak car park, and then headed out along the Verna Dunshee trail around the peak, where the views were amazing. I then decided to head up to the top along the Plank Walk trail to see the views from up by the fire lookout at 2571 feet.